If you’re very familiar with the scientific method, skip down to part two.

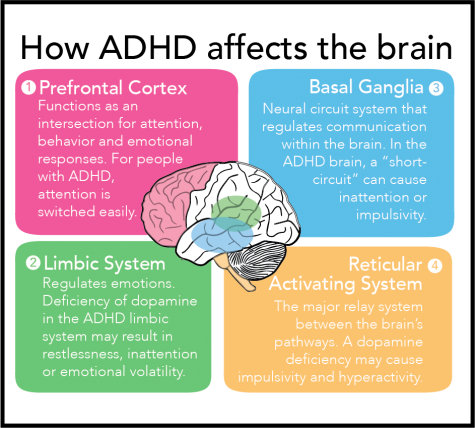

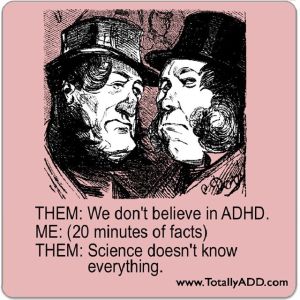

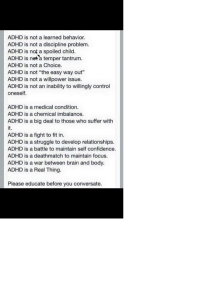

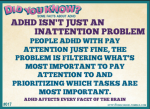

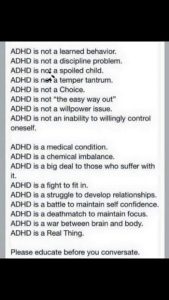

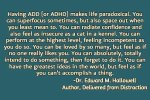

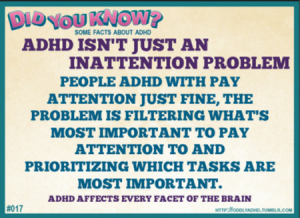

Many of us are interested in research on ADHD. It’s important to understand what’s going on.

Part One: The Scientific Method

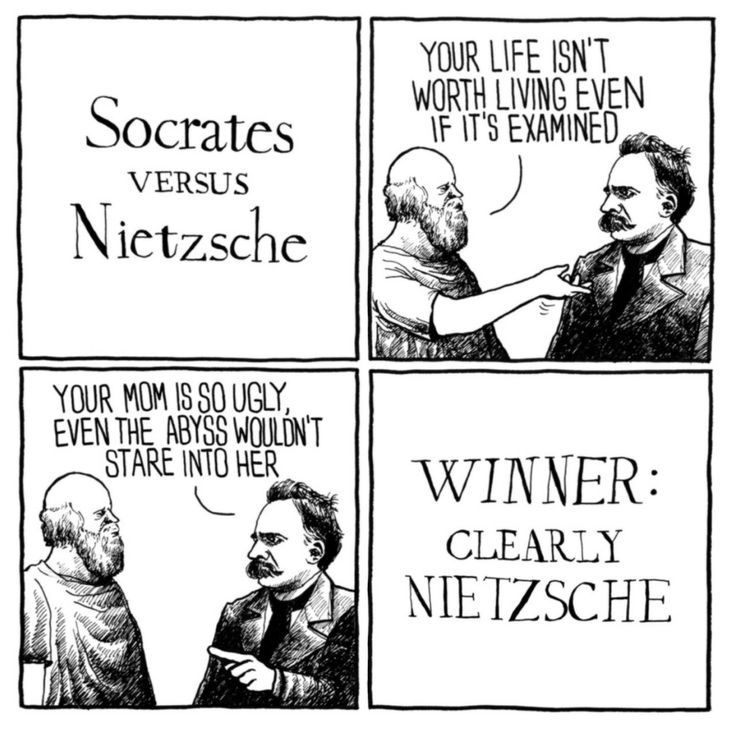

1. A researcher states a hypothesis that could, in principle, be proven false.

(For example, “There is no God” and “There is a God” are statements that could never be proven false, so scientific research cannot address them. “Medication A gives better results than placebo” could be proven false and so is testable.)

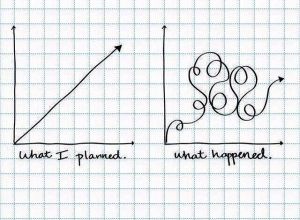

2. The researcher records the plan of the research, the outcomes to be measured, and the statistics to be used. (Sometimes researchers go back after the fact and come up with different questions or approaches using the same data. This is a very questionable practice, because if you ask enough different questions of the same data, something is likely to look significant purely by chance.)

3. The research is done. For testing medicines, the gold standard is the randomized, double-blind, placebo-controlled trial. Placebo-controlled means that a control group gets a placebo while the experimental group receives the medicine being tested. Double-blind means that neither the researchers nor the subjects know which one each subject is receiving.

Randomized means the subjects are assigned to either the control or the active group at random. In good studies, after this is done, the two groups are compared to check that their characteristics are about the same and that no big differences between the groups, in age for example, have occurred by chance.
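For readers who like to see this in code, here is a minimal Python sketch of random assignment followed by a baseline check on age. The subjects, their ages, and the function name `randomize` are all made up for illustration; real trials use more careful procedures than a simple shuffle.

```python
import random

def randomize(subjects, seed=None):
    """Shuffle a copy of the subjects and split it into two equal-sized groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def mean_age(group):
    """Average age of a group of (subject_id, age) pairs."""
    return sum(age for _, age in group) / len(group)

# Hypothetical subjects: (subject_id, age). These are not real data.
age_rng = random.Random(1)
subjects = [(subject_id, age_rng.randint(18, 65)) for subject_id in range(200)]

control, active = randomize(subjects, seed=42)

# Baseline check: the two averages should be close if randomization worked.
print("control mean age:", round(mean_age(control), 1))
print("active mean age: ", round(mean_age(active), 1))
```

If the two averages came out far apart, that would be a sign that chance had produced unbalanced groups, which is exactly what the comparison step is meant to catch.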

The gold standard is not always possible. For example, one study of schoolchildren at risk of dropping out compared a group who received one hour a week of help from a mentor with a control group who did not. It couldn’t be double-blind, but the researchers who measured the results could be kept unaware of which group each child was in. The hypothesis that the mentored group would do better was supported.

4. Good studies need large groups, to reduce the risk of getting a result purely by chance rather than a true one. The larger the groups, the more statistical power the study has, meaning the better its chance of detecting a real effect.

5. The statistical method is chosen before the research is done. There are many different statistical approaches, and different ones suit different kinds of studies. The most common measure is the p-value: the probability of getting a result at least as strong as the one observed if the treatment actually had no effect. If p is less than .05, the result can be called “statistically significant,” but a stricter cutoff such as .01 is often preferred, meaning a result that strong would turn up by chance alone less than once in a hundred times. A smaller p-value is stronger evidence against pure chance, but it does not by itself show that the findings are valid or important.

Again, there are many different ways of reporting results, such as “number needed to treat,” meaning the number of patients who would need to receive the medicine for one additional patient to benefit, compared with placebo. Obviously, you want a small number.
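The number needed to treat is usually worked out as 1 divided by the difference between the response rate on the medicine and the response rate on placebo, rounded up. Here is a small Python sketch of that arithmetic. The function name and all the trial numbers are made up for illustration.

```python
import math
from fractions import Fraction

def number_needed_to_treat(responders_treated, n_treated,
                           responders_placebo, n_placebo):
    """NNT = 1 / (response rate on medicine - response rate on placebo), rounded up.

    Fractions are used so the arithmetic is exact and rounding up is safe.
    """
    rate_treated = Fraction(responders_treated, n_treated)
    rate_placebo = Fraction(responders_placebo, n_placebo)
    difference = rate_treated - rate_placebo
    if difference <= 0:
        raise ValueError("no benefit over placebo, so NNT is not defined")
    return math.ceil(1 / difference)

# Hypothetical trial: 60 of 100 improve on the medicine, 40 of 100 on placebo.
print(number_needed_to_treat(60, 100, 40, 100))  # 5

# Hypothetical weaker medicine: 45 of 100 versus 40 of 100.
print(number_needed_to_treat(45, 100, 40, 100))  # 20
```

In the first made-up trial, five patients would need to take the medicine for one extra patient to benefit. In the second, it takes twenty.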

6. The paper is written with a description of the research method, the statistics used, and the findings. It is submitted to a reputable scientific journal and then peer-reviewed: several experts in the field review it and indicate whether it is valid, worth publishing, or in need of improvement.

7. If published, the research or experiments need to be repeated by different researchers in different laboratories using the same approach to see if they come out with the same results.

8. If the results are duplicated, then “science” generally will accept the findings as accurate (“science says” or “research shows”). We don’t put much stock in a single study finding that has not been duplicated.
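To make the p-value and group-size ideas from the steps above more concrete, here is a rough Python sketch using a permutation test. This is just one of many statistical approaches, picked because it is easy to follow: if the medicine did nothing, the group labels would be meaningless, so we shuffle them many times and count how often chance alone gives a difference at least as big as the one observed. The data are simulated, and the effect size, group sizes, and function names are assumptions made for illustration.

```python
import random

def average(values):
    return sum(values) / len(values)

def permutation_p_value(active, control, n_perm=2000, seed=0):
    """Estimate a two-sided p-value for the difference in group averages."""
    rng = random.Random(seed)
    observed = abs(average(active) - average(control))
    pooled = list(active) + list(control)
    n_active = len(active)
    at_least_as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # pretend the group labels were assigned by chance
        diff = abs(average(pooled[:n_active]) - average(pooled[n_active:]))
        if diff >= observed - 1e-12:  # small tolerance for rounding error
            at_least_as_extreme += 1
    # Adding 1 to both counts keeps the estimate from ever being exactly zero.
    return (at_least_as_extreme + 1) / (n_perm + 1)

def simulate_trial(n_per_group, true_effect, seed):
    """Simulated scores: control centered on 0, active shifted by true_effect."""
    rng = random.Random(seed)
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    active = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
    return active, control

# Same hypothetical true effect, small groups versus large groups.
for n_per_group in (10, 200):
    active, control = simulate_trial(n_per_group, true_effect=0.3, seed=7)
    p = permutation_p_value(active, control)
    print(f"{n_per_group} per group: p = {p:.3f}")
```

Rerunning with different seeds gives a feel for how much small-group results bounce around, and for why a single study that has not been duplicated deserves caution.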

Part Two: Problems with the scientific approach

There are many ways science can go wrong, as illustrated by the way dietary recommendations change from year to year. Experiments need to be properly designed, properly run, honestly reported, peer-reviewed and duplicated.

- In many medication trials, the average benefit across the whole experimental group is not large enough to be significant, and the medication is dropped. There may be a small subgroup of people who did benefit, but these are usually not tested further.

- Doing studies is expensive, and many are funded by drug companies, which risks bias in the design, the interpretation, possibly even the results, and in which studies get published.

- For many studies the results are negative, meaning not statistically significant, and these are rarely published although they could be scientifically useful.

- Statistically significant does not always mean clinically significant.

- In recent years, the number of people who respond to placebo has risen, making it harder to prove a medication effective, and some medications that might work are dropped. The reasons for this are not clear. Could it relate to the sixth point in this list, people faking their information to get into studies? (The placebo effect is powerful, but its benefits tend not to last.)

- Some people fake their information to get into studies for the money or other benefits.

- For many trials, it is difficult to recruit enough subjects to get good results.

- Some studies use a placebo group for conditions like schizophrenia, where going without treatment can harm the subjects. I think this is unethical.

- All of these problems have been getting attention in recent years, and the science is improving.
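The point in the list above that statistically significant does not always mean clinically significant can be shown with a little arithmetic. The Python sketch below uses a simple two-group z-test, which assumes the spread of scores (the standard deviation) is known and the same in both groups. That test is my choice for illustration, not something the studies discussed here necessarily used, and the symptom scores are made up.

```python
import math

def two_sided_p_value(mean_difference, sd, n_per_group):
    """p-value from a two-group z-test with a known, shared standard deviation."""
    standard_error = sd * math.sqrt(2 / n_per_group)
    z = mean_difference / standard_error
    return math.erfc(abs(z) / math.sqrt(2))

def effect_size(mean_difference, sd):
    """Difference expressed in standard deviations (a rough guide to importance)."""
    return mean_difference / sd

# Hypothetical symptom scale with a standard deviation of 10 points.
# Huge trial, tiny benefit: half a point, 10,000 people per group.
p_tiny_effect = two_sided_p_value(mean_difference=0.5, sd=10, n_per_group=10_000)

# Small trial, sizeable benefit: five points, 10 people per group.
p_big_effect = two_sided_p_value(mean_difference=5, sd=10, n_per_group=10)

print(f"tiny effect ({effect_size(0.5, 10):.2f} SD), huge trial: p = {p_tiny_effect:.4f}")
print(f"big effect ({effect_size(5, 10):.2f} SD), small trial: p = {p_big_effect:.4f}")
```

In this made-up example the half-point improvement is statistically significant but probably too small for a patient to notice, while the five-point improvement misses significance only because the trial was small. This ties back to the point that trials need enough subjects.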

Part Three: Comments

I have mostly used medication testing as the example in this post, but the scientific method applies to all research.

I find the statistics complicated and confusing. I don’t understand them, and some of what I have just told you may be wrong, but the general idea is correct anyway.

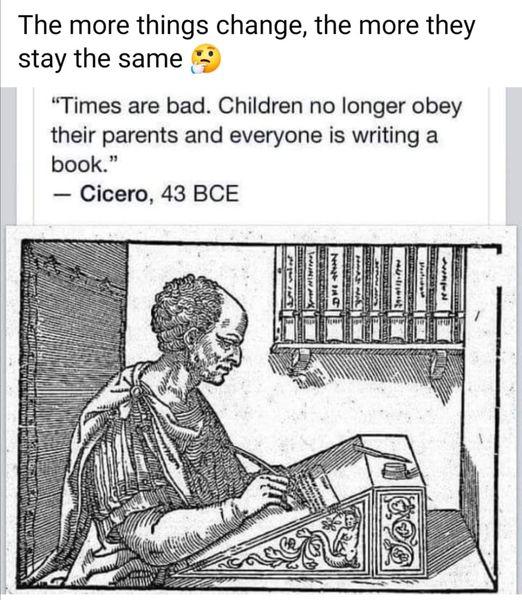

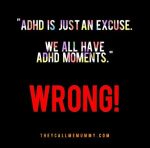

I view most scientific results with a certain degree of skepticism, and some of them I don’t believe even if they seem to have gone through this process properly. This is presumably an example of confirmation bias (also called confirmatory bias), the cognitive bias where we collect data that agree with our preconceived notions and reject those that go against them. It is hard to avoid or to shake.

Overall, I think it is much wiser and safer to go with properly done scientific findings, even though they may turn out to be wrong, rather than intuition, hunches, prejudices, biases, or conspiracy theories. The odds are in favor of the science.

In my posts, I try to make clear when I’m stating my opinion versus when I’m stating accepted scientific findings or fact. Please catch me and comment when I fail to do this.

doug

Heads Up O the Day:

I plan for the next post to expand on this, but this one is already too long (right, Martha?)

I think it will be interesting.

Request O the Day:

Some of you surely know more about this, especially statistics, than I do. Please comment, correct me, or argue.

Links:

The Scientific Method

Opinion

Confirmatory Bias

Mr Bean

@addstrategies #adhd #add @dougmkpdp

ADHD and OCD?

living with ADHD

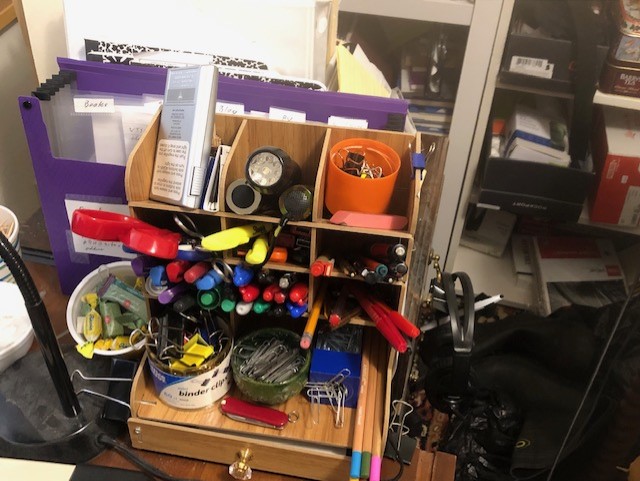

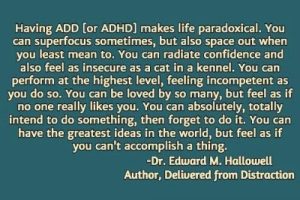

I definitely recognise myself in the “not being able to keep the house clean & tidy” bit. I live in creative chaos. And when things get out of sight, they get out of mind. So books I’m reading and want to read are piled everywhere. Projects and notebooks are always close by, too.

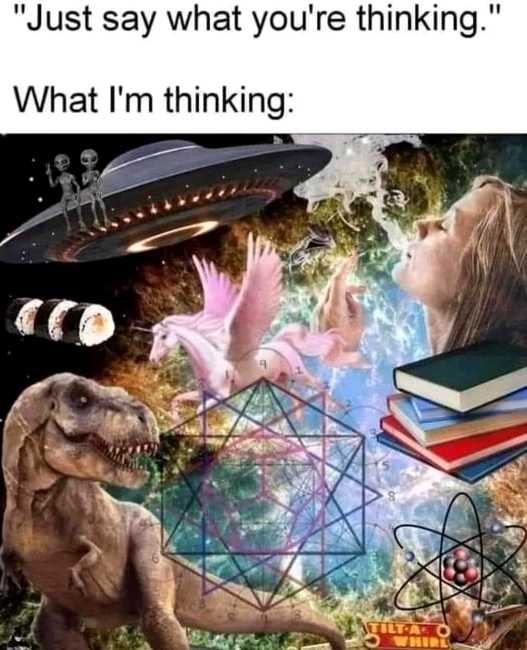

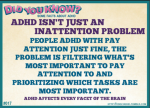

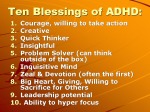

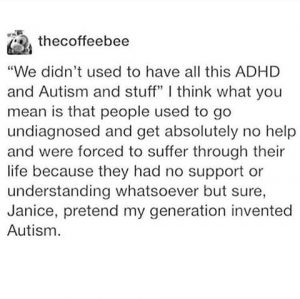

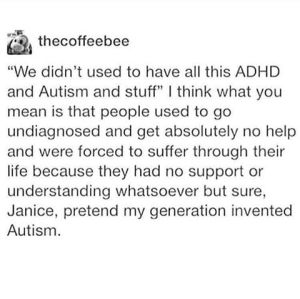

But I also want to reply about the hyperactive bit, because that’s something I had a discussion about with my psychiatrist back when I got diagnosed with ADHD (and Autism). I asked him: “Don’t you mean I have ADD? Because I’m not hyperactive.” And he pointed out that hyperactivity doesn’t necessarily have to show outwardly. You can also be hyperactive inside (mind & body): feeling restless, having a racing mind. I found that a very interesting argument, because my biggest ADHD problem might just be my insanely busy brain. There is no brake or stop button. It just goes, full time and non-stop.

In reply to doug with ADHD.

I recognise what you mean with the flywheel, I think. It mostly shouts a list of tasks and projects I should be doing, and a list of things I really shouldn’t forget. Which makes sitting still very hard. Like you said, meditation has also helped me in the past. But for some reason I can just never stick with it. Even though I know it helps, I just can’t get myself to sit down and do it. Because *makes chaotic and dramatic arm gestures* “The things! All the things that need to be done!” xD

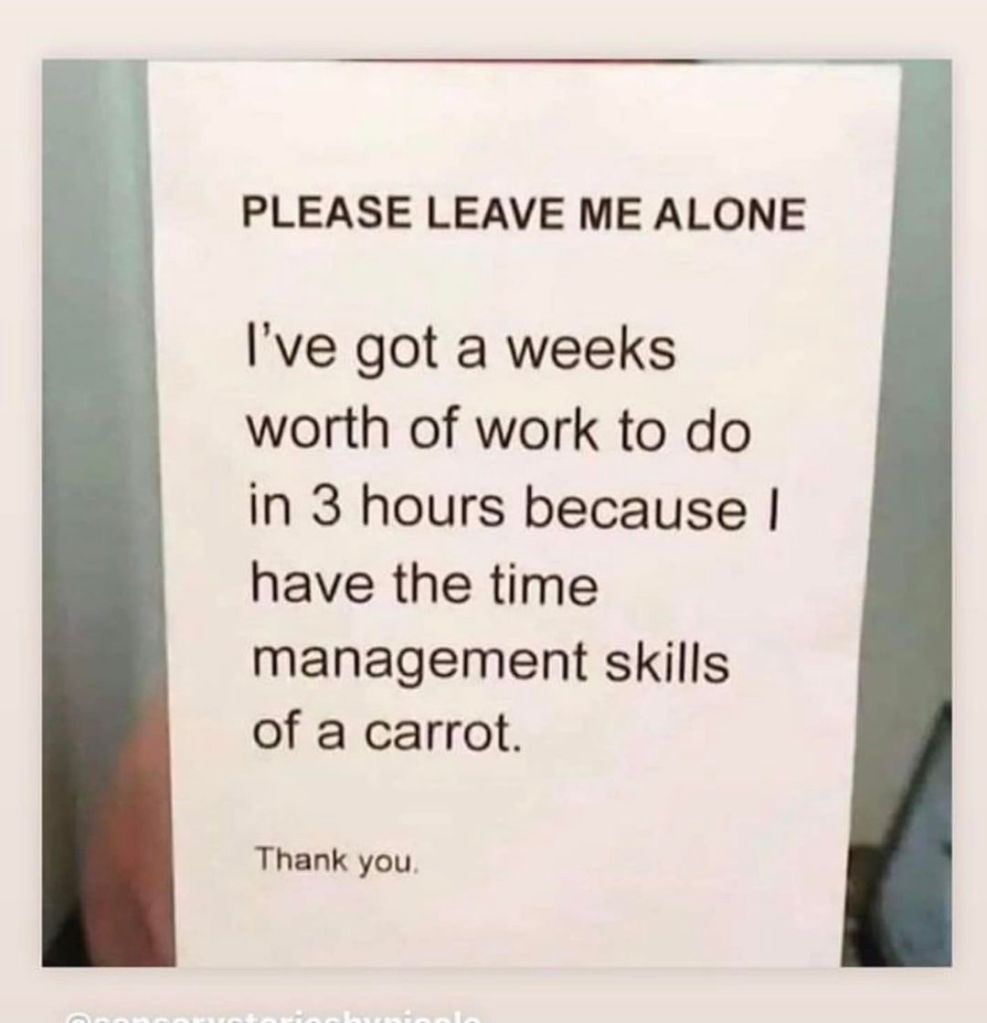

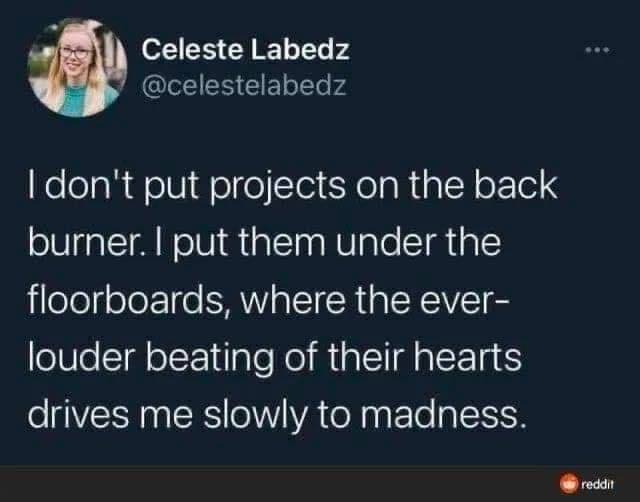

I also find it hard to finish things, especially books or projects. Sometimes I can enjoy something so much that my hyperfocus takes over (I have ADHD & Autism. My hyperfocus can be strong xD), and I finish it in no time. But a lot of the time I get bored, or something new distracts me. That leads to reading 20 books at once, usually with about 3 or 4 actively (in turns), and about 20 craft projects, also with a few active ones. I struggle with it more now that I’m trying to start up a small business, where having projects finished frequently (and on time) is kind of a must. So I hope I can work on it by challenging myself (setting goals to make it interesting) and by taking my meds on time, every time.

Do you struggle with this too?

I also share “If I can’t see it, it doesn’t exist.”

doug

Links: –

A Follow Up – How to recognize ADHD in girls

James Clear

I just started on meds, and—

Deficient Emotional Self-Regulation: ADHD Webinar

ADHD makes it hard to prioritize, make decisions, choose, select, not over extend, edit, and some other things too

#ADHD, #adultADHD, @dougmkpdp, @addstrategies, @adhdstrategies

ADHD Frantic!